Live waveform display (aka oscilloscope)

One of the nice things about building a digital synthesizer is that everything is just code. My code is responsible for generating a series of integers that my DAC turns into audio, but I can do a lot of other things along the way as long as I deliver those integers to the DAC in time. If I don't, a buffer underrun occurs, something that used to be a common occurrence when making computer music.

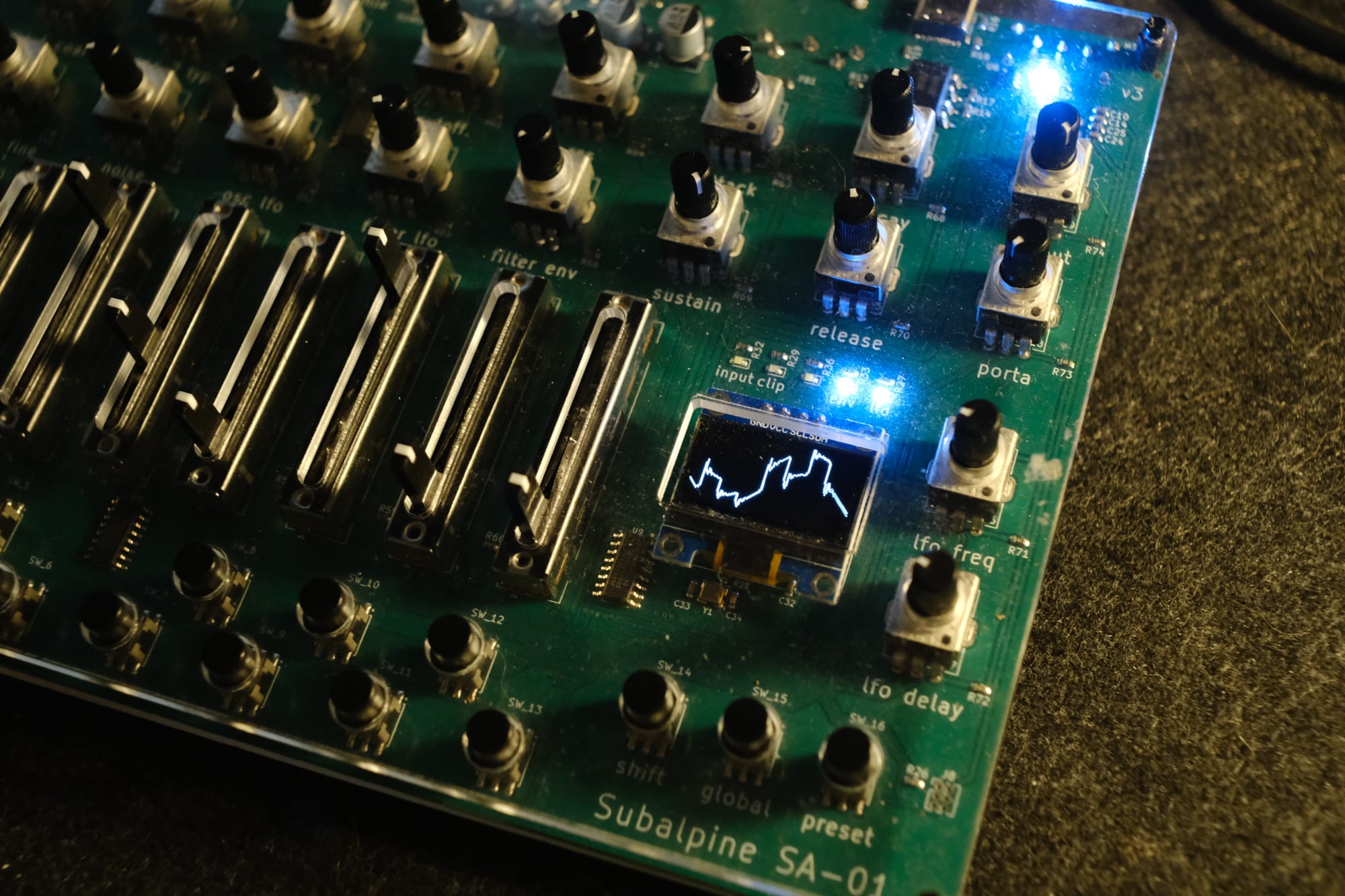

For example, I wanted to generate a realtime oscilloscope view of the waveform being generated. The final result looks pretty good:

Resonant filter makes for neat shapes

Building a single-cycle oscilloscope on its own would be a challenge - a general-purpose scope has to determine the frequency of an incoming signal in realtime, and if the signal contains many frequencies, which one does it pick? If you've ever had to fiddle with the tracking on a bench scope you'll know what I mean. In my case, however, I'm the one generating all of those samples. I know exactly when a cycle starts and ends, and even though it's a polyphonic synthesizer (i.e. it can play 6 notes at once), I can decide which single-frequency waveform to display. It's just data - numbers I'm already generating to make sound - so it's just a matter of picking the right numbers and getting them to the right place for display on a screen (along with some scaling).

The code for doing this is only a handful of lines. It's a little sloppy - the FPU on the ESP32S3 is surprisingly capable, so I'm doing more floating point operations than I should, but here's the idea. In Voice.h, some setup:

```cpp
float prevPhase = 0.0f;
std::array<float, 128> pendingBuffer = {0};
std::array<float, 128> outputBuffer = {0};
uint8_t lastPendingBufferIndex = 0;
```

Voice.h

And in Voice::Process(), which generates a sample for a single voice:

```cpp
float newPhase = this->wavetableOscs[0].GetPhase();

// if osc1 has wrapped around, publish the finished cycle and start a new one
if (newPhase < prevPhase) {
    outputBuffer = pendingBuffer;
    pendingBuffer = {0};
}

uint8_t index = static_cast<uint8_t>(newPhase * 128);

// when a cycle has fewer than 128 samples, hold the previous sample
// across the slots that were skipped
if (index - lastPendingBufferIndex > 1) {
    for (uint8_t i = lastPendingBufferIndex; i < index; i++) {
        pendingBuffer[i] = pendingBuffer[lastPendingBufferIndex];
    }
}

pendingBuffer[index] = processed;
lastPendingBufferIndex = index;
prevPhase = newPhase;
```

Voice::Process()

It's pretty straightforward - we initialize two buffers of size 128 (the OLED is 128 pixels wide) and a few variables for keeping track of where we are. Every time we generate a sample, we check the phase of the primary oscillator (I've chosen to sync the oscilloscope to the primary oscillator, but it could be the secondary). If it has wrapped around, we know the pending buffer is complete - assign it to the output buffer and carry on. The rest of the code just maps the floating point phase value to the correct index in the pending buffer. This includes handling the case where a cycle contains fewer samples than the display has columns - as we go through the array, we hang onto the previous sample and drop it in until we get to a new one. A possible improvement would be linear interpolation here, but a) I think it looks cool with the quantized waveform and b) I'd have to rework this a bit to have dynamic-length arrays - or deal with extra floating point math in the sample generation loop - and I don't really want to do either!
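For the curious, the linear interpolation variant could look roughly like the sketch below. This is a standalone illustration, not the synth's actual code: `ScopeCapture`, `push`, and `kWidth` are hypothetical names, the phase is assumed to lie in [0, 1), and it pays for the smoother line with the extra per-sample floating point math.

```cpp
#include <array>
#include <cstddef>

// Hypothetical standalone helper, not the synth's Voice class.
struct ScopeCapture {
    static constexpr std::size_t kWidth = 128;  // one slot per OLED column

    std::array<float, kWidth> pending{};  // cycle currently being recorded
    std::array<float, kWidth> output{};   // last completed cycle
    float prevPhase = 0.0f;
    float lastSample = 0.0f;
    std::size_t lastIndex = 0;

    // phase is assumed to be in [0, 1); sample is the voice's output value.
    void push(float phase, float sample) {
        if (phase < prevPhase) {
            // Phase wrapped: hold the last value to the end of the cycle,
            // publish the finished buffer, and start a new one.
            for (std::size_t i = lastIndex + 1; i < kWidth; ++i) {
                pending[i] = lastSample;
            }
            output = pending;
            pending.fill(0.0f);
            pending[0] = lastSample;  // starting point for the next ramp
            lastIndex = 0;
        }

        std::size_t index =
            static_cast<std::size_t>(phase * static_cast<float>(kWidth));
        if (index >= kWidth) index = kWidth - 1;  // guard against phase == 1.0

        // Ramp linearly across any columns skipped since the last sample.
        if (index > lastIndex + 1) {
            const float span = static_cast<float>(index - lastIndex);
            for (std::size_t i = lastIndex + 1; i < index; ++i) {
                const float t = static_cast<float>(i - lastIndex) / span;
                pending[i] = lastSample + t * (sample - lastSample);
            }
        }

        pending[index] = sample;
        lastIndex = index;
        lastSample = sample;
        prevPhase = phase;
    }
};
```

Calling `push(phase, sample)` once per generated sample and reading `output` from the display code would mirror the flow described above. The chunky look goes away, which is half the reason I haven't bothered.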

Cool looking chunky waveform (fewer than 128 samples in a cycle)

In the main audio loop, where we gather up and sum the samples from the voices and send samples to the DAC, we grab a pointer to the latest completed buffer from the last used voice and assign it to a variable in the displayState struct. This means that the scope will always be displaying the last played note, which feels correct to me (displaying a true summed output of all voices would be complicated and look less interesting).

```cpp
// update the display's scope view buffer to show the latest voice
state.displayState.outputBuffer = state.voices[state.lastVoiceIndex].GetOutputBuffer();
state.displayState.outputMultiplier = state.activePreset->amp;
```

Audio loop code, assigning output buffer for display

And finally in the display code - iterate through each horizontal pixel, clamp (in the case of a clipping sample, the filter is quite resonant) & normalize the vertical pixel, then either draw it to the framebuffer directly or connect it to the previous vertical pixel with a line.

```cpp
ssd1306_clear_screen(display, 0);

uint8_t prevY = 0;
auto outputBuffer = *state.outputBuffer;

for (int i = 0; i < 128; i++) {
    // fclamp to hard clip signal, then scale to rows 0..63
    // (scaling by 64 would put a fully clipped sample on row 64, one past the bottom)
    float normalized = fclamp(((-outputBuffer[i] * state.outputMultiplier) + 1.0f) / 2.0f, 0.0f, 1.0f) * 63.0f;
    uint8_t y = static_cast<uint8_t>(normalized);

    if (i == 0) {
        ssd1306_fill_point(display, i, y, 1);
    } else {
        // bridge vertical gaps with a line so the trace stays connected
        if (abs(prevY - y) > 1) {
            ssd1306_draw_line(display, i - 1, prevY, i, y);
        } else {
            ssd1306_fill_point(display, i, y, 1);
        }
    }

    prevY = y;
}

ssd1306_refresh_gram(display);
```

The display library can push the framebuffer out at about 45 Hz over I2C, so this looks really cool.
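The sample-to-pixel mapping is the only slightly fiddly part of the display loop, so here it is pulled out into a small function. This is a sketch under assumptions: `sampleToRow` is a hypothetical name, `std::clamp` (C++17) stands in for `fclamp`, and the panel is assumed to be 64 rows tall, so valid rows run from 0 to 63.

```cpp
#include <algorithm>
#include <cstdint>

// Hypothetical helper: maps a sample in [-1, 1] to an OLED row.
// Assumes row 0 is the top of the screen and `rows` is the panel height.
inline std::uint8_t sampleToRow(float sample, float gain, int rows = 64) {
    // Negate so positive samples are drawn toward the top of the screen,
    // then shift from [-1, 1] into [0, 1].
    float unit = (-sample * gain + 1.0f) * 0.5f;

    // Hard clip anything the resonant filter pushed past full scale.
    unit = std::clamp(unit, 0.0f, 1.0f);

    // Scale by rows - 1 so a full-scale sample still lands on a real row.
    return static_cast<std::uint8_t>(unit * static_cast<float>(rows - 1));
}
```

With these assumptions, a sample of `1.0f` at unity gain lands on row 0, `-1.0f` lands on row 63, and anything louder is pinned to those same edges rather than falling off the screen.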

Here's a more interesting demo, running MIDI to the device for the bassline from my 2012 track "With You ft. Kotomi":

Demo with actual music!